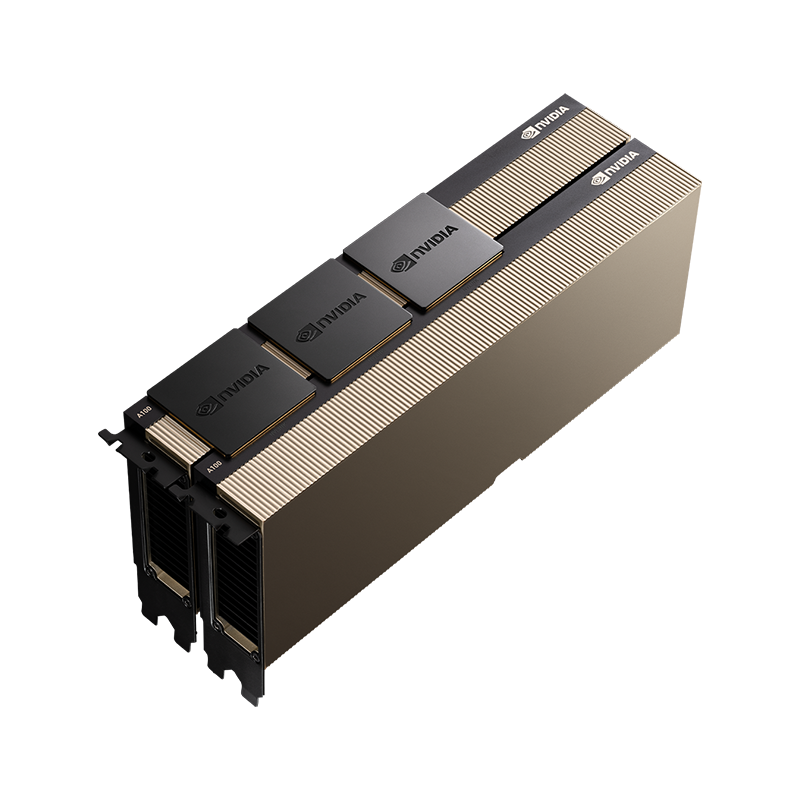

NVIDIA A100

Unprecedented Acceleration for World’s Highest-Performing Elastic Data Centers

Overview

The NVIDIA A100 Tensor Core GPU delivers unprecedented acceleration at every scale to power the world’s highest-performing elastic data centers for AI, data analytics, and high-performance computing (HPC) applications. As the engine of the NVIDIA data center platform, A100 provides up to 20x higher performance over the prior NVIDIA Volta generation. A100 can efficiently scale up or, with Multi-Instance GPU (MIG), be partitioned into as many as seven isolated GPU instances, providing a unified platform that enables elastic data centers to dynamically adjust to shifting workload demands.

A100 is part of the complete NVIDIA data center solution that incorporates building blocks across hardware, networking, software, libraries, and optimized AI models and applications from NGC. Representing the most powerful end-to-end AI and HPC platform for data centers, it allows researchers to deliver real-world results and deploy solutions into production at scale, while allowing IT to optimize the utilization of every available A100 GPU.

NVIDIA Ampere-Based Architecture

A100 accelerates workloads big and small. Whether using MIG to partition an A100 GPU into smaller instances, or NVLink to connect multiple GPUs to accelerate large-scale workloads, the A100 easily handles different-sized application needs, from the smallest job to the biggest multi-node workload.

HBM2e

With 40 or 80 gigabytes (GB) of high-bandwidth memory (HBM2e), A100 delivers improved raw bandwidth of 1.6 TB/sec, as well as higher dynamic random-access memory (DRAM) utilization efficiency at 95 percent. A100 delivers 1.7x higher memory bandwidth than the previous generation.
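The figures quoted above can be combined with some simple arithmetic. The sketch below is illustrative only: it uses the numbers stated in the text (1.6 TB/sec raw bandwidth, 95 percent DRAM utilization efficiency, 1.7x over the previous generation), and it assumes that multiplying raw bandwidth by utilization efficiency is a fair estimate of achievable bandwidth. The function names are hypothetical and are not part of any NVIDIA tool.

```python
# Figures quoted in the text; everything derived from them is an estimate.
RAW_BANDWIDTH_TB_S = 1.6      # raw memory bandwidth, TB/sec
DRAM_EFFICIENCY = 0.95        # DRAM utilization efficiency
SPEEDUP_VS_PREVIOUS = 1.7     # bandwidth ratio versus the previous generation


def effective_bandwidth_tb_s(raw=RAW_BANDWIDTH_TB_S, efficiency=DRAM_EFFICIENCY):
    """Estimate achievable bandwidth as raw bandwidth times efficiency (assumption)."""
    return raw * efficiency


def implied_previous_bandwidth_tb_s(current=RAW_BANDWIDTH_TB_S,
                                    speedup=SPEEDUP_VS_PREVIOUS):
    """Previous-generation bandwidth implied by the quoted speedup."""
    return current / speedup


def seconds_to_stream(capacity_gb, bandwidth_tb_s):
    """Time to read the given capacity once, using 1 TB = 1000 GB."""
    return capacity_gb / (bandwidth_tb_s * 1000)


if __name__ == "__main__":
    eff = effective_bandwidth_tb_s()
    print(f"Estimated effective bandwidth: {eff:.2f} TB/sec")
    print(f"Implied previous generation:   {implied_previous_bandwidth_tb_s():.2f} TB/sec")
    for capacity in (40, 80):
        ms = seconds_to_stream(capacity, eff) * 1000
        print(f"One full pass over {capacity} GB:     {ms:.1f} ms")
```

Under these assumptions the estimated effective bandwidth is about 1.52 TB/sec, and the quoted 1.7x ratio implies roughly 0.94 TB/sec for the previous generation.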

Third-Generation Tensor Cores

First introduced in the NVIDIA Volta architecture, NVIDIA Tensor Core technology has brought dramatic speedups to AI training and inference operations, bringing down training times from weeks to hours and providing massive acceleration to inference. The NVIDIA Ampere architecture builds upon these innovations by providing up to 20x higher FLOPS for AI. It does so by improving the performance of existing precisions such as INT8 and by bringing new precisions, TF32 and FP64, that accelerate and simplify AI adoption and extend the power of NVIDIA Tensor Cores to HPC.
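To give a feel for what the TF32 precision mentioned above trades away, here is a minimal sketch that emulates it in plain Python. TF32 keeps the 8-bit exponent of FP32 but only 10 mantissa bits, so the sketch clears the low 13 of the 23 FP32 mantissa bits. The function name `to_tf32` is hypothetical, and truncation is an assumption made for simplicity; the rounding behavior of the actual hardware may differ.

```python
import struct


def to_tf32(x: float) -> float:
    """Emulate TF32 precision by truncating an FP32 value to 10 mantissa bits.

    Illustrative only: real Tensor Cores may round rather than truncate.
    """
    # Reinterpret the value as the 32-bit pattern of an FP32 number.
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    # Keep sign (1 bit), exponent (8 bits), and the top 10 mantissa bits.
    bits &= 0xFFFFE000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    for value in (1.0, 1.0 + 2**-10, 1.0 + 2**-11, 3.14159265):
        print(f"{value!r} -> {to_tf32(value)!r}")
```

In this emulation a step of 2^-10 above 1.0 survives, while a step of 2^-11 is lost, which shows that the range of FP32 is preserved but its fine-grained precision is not.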
